This proof of concept explored the intersection of galvanic skin response (GSR) and generative artificial intelligence (AI) through a tool that creates visual content from physiological data. To explore emotion-responsive generative AI, the proof of concept used an Arduino MKR WiFi 1010, a Seeed Studio Grove GSR sensor, and a Raspberry Pi 4 Model B. The software was written in Python and used the OpenAI DALL-E API.

Bioadaptive generative AI for personalized visual content

Summary

Off-the-shelf hardware and sensors bring together physiological data and generative AI

In this proof of concept, galvanic skin response (GSR) refers to changes in the skin's electrical conductance caused by sweat gland activity during physiological arousal. It is measured with sensors placed on the skin, often as part of a wearable device. Generative artificial intelligence (AI) refers to algorithms capable of creating new content; this project focused specifically on image generation.

Working on this project, I gained an introductory understanding of API integration, explored embedded electronics, and identified areas for future work. The primary goal of the proof of concept was to communicate the potential of emerging technology to redefine content creation.

The process involved researching and gathering the necessary electronic components, collaborating with generative AI to write the Arduino sketch and Python script, and assembling the prototype iteratively. The project ran from May 2023 to June 2023.

Role | Product design, Research

Timeline | May 2023 to June 2023

Components

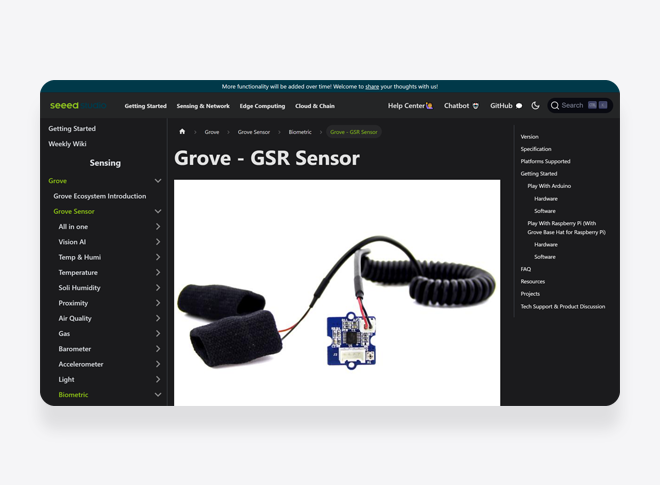

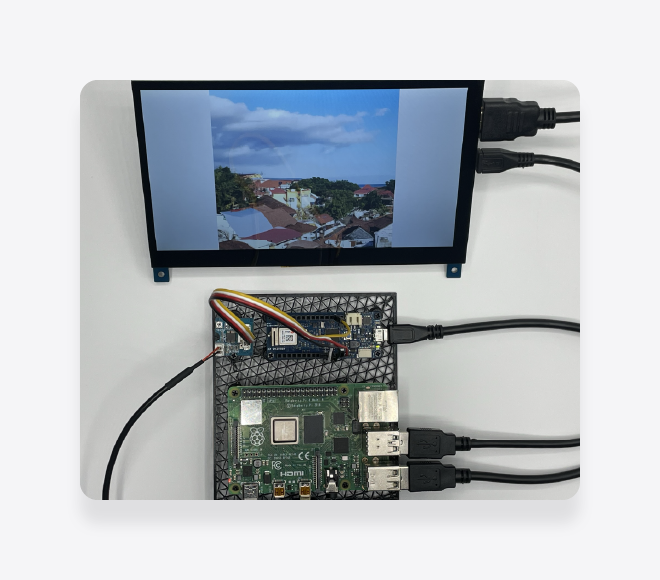

The proof of concept used off-the-shelf components: an Arduino MKR WiFi 1010, a Seeed Studio Grove GSR Sensor, and a Raspberry Pi 4 Model B, along with a power supply and input devices such as a keyboard and mouse.

The project also required a Grove – 4 pin Female to Grove 4 pin cable and a Micro USB cable. To display the generated images, the Raspberry Pi was connected to a SunFounder display via HDMI.

Arduino MKR WiFi 1010

In this proof of concept, the Arduino MKR WiFi 1010 served as a flexible microcontroller board whose built-in WiFi module leaves room for wireless connectivity in future iterations.

The Arduino MKR WiFi 1010 communicated with the Raspberry Pi over a serial connection through the Micro USB cable, which also powered the board.
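On the Raspberry Pi side, reading the Arduino's output amounts to reading newline-terminated text lines from the serial port, because the sketch prints one value per line with `Serial.println()` at 9600 baud. The following is a minimal sketch, assuming the pyserial package on the Pi; `parse_gsr_line` and `read_gsr_values` are hypothetical helpers, not part of the original script, and they accept any object with a `readline()` method so they can be exercised without hardware.

```python
from typing import Iterator, Optional, Protocol


class LineSource(Protocol):
    """Anything with a readline() method, such as a pyserial Serial object."""

    def readline(self) -> bytes: ...


def parse_gsr_line(raw: bytes) -> Optional[int]:
    """Decode one line written by Serial.println() into an integer reading.

    Returns None for empty, partial, or non-numeric lines, which can appear
    when the port is opened in the middle of a transmission.
    """
    text = raw.decode("ascii", errors="ignore").strip()
    if not text.isdigit():
        return None
    return int(text)


def read_gsr_values(port: LineSource, limit: int) -> Iterator[int]:
    """Yield up to `limit` valid readings from the port."""
    count = 0
    while count < limit:
        line = port.readline()
        if not line:  # an empty result means the read timed out with no data
            break
        value = parse_gsr_line(line)
        if value is not None:
            count += 1
            yield value
```

On the Pi, `port` could be `serial.Serial("/dev/ttyACM0", 9600, timeout=2)`; the device path is an assumption and may differ on a given setup.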

Grove GSR Sensor

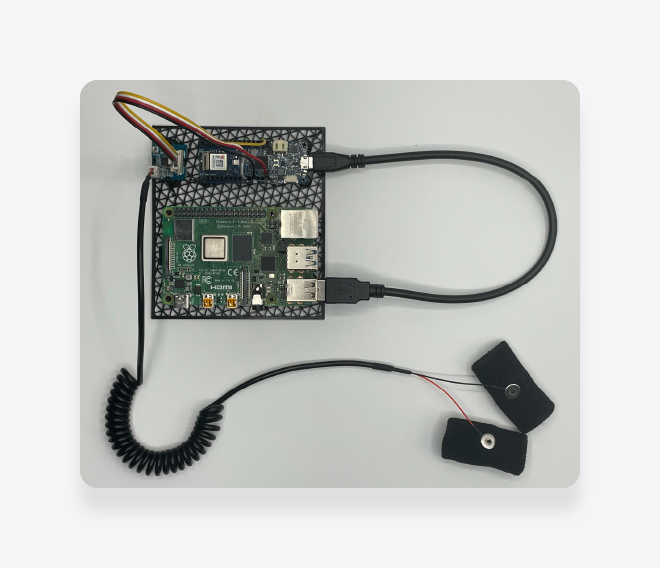

The Grove GSR Sensor by Seeed Studio supplied the physiological input for this proof of concept. Its two electrodes, attached to the user's fingers, tracked changes in the skin's electrical conductance, which served as an indicator of the user's physiological and, indirectly, emotional state.

The Arduino MKR WiFi 1010 read the Grove GSR Sensor's analog output and sent each reading to the Raspberry Pi once per second. This pipeline makes the sensor a practical input for projects that interpret and respond to emotional or physiological triggers, although the quality of the data it produces also needs to be taken into account.
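The Arduino sketch in this project sends each raw reading as-is, so any smoothing of sensor noise has to happen elsewhere. One simple option is a moving average over the last few readings. The following is a minimal sketch, assuming the smoothing runs in Python on the Raspberry Pi; the `MovingAverage` class and its default window of 5 readings are hypothetical choices, not part of the original project.

```python
from collections import deque
from typing import Deque


class MovingAverage:
    """Smooth noisy GSR readings by averaging the most recent `window` values."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._values: Deque[int] = deque(maxlen=window)

    def update(self, value: int) -> float:
        """Add a new reading and return the average of the current window."""
        self._values.append(value)  # the deque drops the oldest value when full
        return sum(self._values) / len(self._values)
```

A larger window gives steadier values but reacts more slowly to genuine changes in arousal, so the window size trades stability against responsiveness.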

Raspberry Pi 4

The Raspberry Pi 4 Model B was the central computer of the hardware setup. This compact single-board computer received data from the Arduino MKR WiFi 1010 and ran the Python script that integrated the generative AI model.

In this setup, Python served as the link between the Arduino and the OpenAI DALL-E API. The Python script read each GSR value from the Arduino, mapped it to a text prompt, and sent that prompt to the DALL-E API, which generated a distinct image from it.
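The mapping from a GSR reading to a prompt can be kept separate from the API call, which makes it testable without network access. The following is a minimal sketch that reuses the thresholds (300, 500, 700) and a subset of the prompts from the project's script. The names `THRESHOLDS`, `PROMPT_BANDS`, `band_for`, and `select_prompt` are hypothetical; the original script performs this step inline with an if/elif chain.

```python
import bisect
import random
from typing import List, Optional

# Band boundaries matching the script's arbitrary thresholds: readings below
# 300 fall in band 0, 300-499 in band 1, 500-699 in band 2, 700+ in band 3.
THRESHOLDS: List[int] = [300, 500, 700]

PROMPT_BANDS: List[List[str]] = [
    ["A peaceful and calming scenery", "A serene landscape with a flowing river"],
    ["A slightly active city scene", "A small town bustling with activity"],
    ["A busy and bustling city", "A city with high traffic and skyscrapers"],
    ["A chaotic scene with a storm", "A city in the midst of a thunderstorm"],
]


def band_for(gsr_value: int) -> int:
    """Return the index of the arousal band a reading falls into."""
    # bisect_right places a reading equal to a threshold in the higher band,
    # which matches the script's strict "<" comparisons.
    return bisect.bisect_right(THRESHOLDS, gsr_value)


def select_prompt(gsr_value: int, rng: Optional[random.Random] = None) -> str:
    """Pick a random prompt from the band that matches the reading."""
    if rng is None:
        rng = random.Random()
    return rng.choice(PROMPT_BANDS[band_for(gsr_value)])
```

Passing a seeded `random.Random` makes the choice reproducible, which helps when checking that each threshold maps to the intended scene type.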

OpenAI DALL-E API

The OpenAI DALL-E API handled image generation on the software side of the proof of concept. The API produces images from text prompts; here, it turned the prompts that the Python script selected based on the GSR data into corresponding visuals.
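The project's script calls the API through the `openai` Python package's pre-1.0 interface (`openai.Image.create`), which newer releases of that package no longer provide. As a version-independent sketch, the same request can be made against OpenAI's REST image-generation endpoint using only the standard library. The function names are hypothetical, and the `model` value assumes DALL-E 2, consistent with the 1024x1024 size used in the original script.

```python
import json
import urllib.request
from typing import Any, Dict

# OpenAI REST endpoint for image generation.
IMAGES_ENDPOINT = "https://api.openai.com/v1/images/generations"


def build_image_request(prompt: str, api_key: str,
                        size: str = "1024x1024") -> urllib.request.Request:
    """Build, but do not send, a POST request asking for one image."""
    payload = {"model": "dall-e-2", "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        IMAGES_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_image_url(response_body: Dict[str, Any]) -> str:
    """Return the URL of the first generated image in a parsed JSON response."""
    return response_body["data"][0]["url"]


def generate_image_url(prompt: str, api_key: str, timeout: float = 60.0) -> str:
    """Send the request and return the image URL (requires network access)."""
    request = build_image_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_image_url(json.load(response))
```

The returned URL is temporary, so the image should be downloaded and displayed soon after the request, as the original script does with `requests.get`.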

The DALL-E API generated images reflecting the individual’s physiological responses. Displayed on a monitor connected to the Raspberry Pi, these images presented a personalized depiction of the user’s physiological condition.
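The script requests 1024x1024 images and shows them in a fullscreen Tkinter window, so on a compact display with a lower resolution, part of the image may fall off-screen. The following is a minimal sketch of scaling the image to fit while preserving its aspect ratio; `fit_within` is a hypothetical helper, and the display's actual resolution is not stated in this write-up.

```python
from typing import Tuple


def fit_within(image_size: Tuple[int, int],
               screen_size: Tuple[int, int]) -> Tuple[int, int]:
    """Scale (width, height) to fit inside the screen, keeping the aspect ratio.

    Images that already fit are returned at their original size rather than
    being enlarged.
    """
    img_w, img_h = image_size
    scr_w, scr_h = screen_size
    scale = min(scr_w / img_w, scr_h / img_h, 1.0)
    return max(1, int(img_w * scale)), max(1, int(img_h * scale))
```

In the Tkinter script, the screen size could come from `root.winfo_screenwidth()` and `root.winfo_screenheight()`, and the result could be passed to Pillow's `Image.resize()` before creating the `ImageTk.PhotoImage`.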

Code

As highlighted in the summary, the code for this proof of concept was written in collaboration with OpenAI's ChatGPT (GPT-4). It consisted of two parts: an Arduino sketch that reads data from the Seeed Studio Grove GSR sensor, and a Python script that sends text prompts to the OpenAI DALL-E API.

Reading the GSR sensor

```cpp
const int GSR_PIN = A0; // The pin where the GSR sensor is connected

void setup() {
  Serial.begin(9600); // Start serial communication at 9600 bps
}

void loop() {
  int gsrValue = analogRead(GSR_PIN); // Read the value from the GSR sensor
  Serial.println(gsrValue);           // Send the value over the serial port
  delay(1000);                        // Wait for a second before reading again
}
```
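To relate the script's thresholds to a physical quantity, a raw reading can be converted to the voltage at the analog pin. The following is a minimal sketch, assuming the board's default 10-bit `analogRead()` resolution (values 0 to 1023) and its 3.3 V operating voltage as the analog reference; the constant and function names are hypothetical. Under these assumptions, the script's lowest threshold of 300 corresponds to roughly 0.97 V.

```python
ADC_MAX = 1023         # assumed 10-bit resolution, the analogRead() default
REFERENCE_VOLTS = 3.3  # assumed 3.3 V analog reference on the MKR WiFi 1010


def reading_to_volts(reading: int) -> float:
    """Convert a raw analogRead() value into the voltage at pin A0."""
    if not 0 <= reading <= ADC_MAX:
        raise ValueError(f"reading must be between 0 and {ADC_MAX}")
    return reading * REFERENCE_VOLTS / ADC_MAX
```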

Generating images

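```python
import os
import random
from io import BytesIO
from tkinter import Label, Tk

import openai  # Uses the pre-1.0 openai package interface (openai.Image.create)
import requests
import serial  # pyserial; assumed, since the main loop below reads the Arduino
from PIL import Image, ImageTk

# Set the OpenAI API key
openai.api_key = os.getenv('OPENAI_API_KEY')

# Prepare the Tkinter window
root = Tk()
root.attributes('-fullscreen', True)  # Fullscreen
label = Label(root)  # Create a label to display the image
label.pack()


def create_image(gsr_value):
    # Map GSR value to image descriptions
    if gsr_value < 300:  # These thresholds are arbitrary, adjust as needed
        prompts = ["A peaceful and calming scenery", "A serene landscape with a flowing river",
                   "A beautiful sunset over a tranquil beach", "A zen garden with blooming flowers"]
    elif gsr_value < 500:
        prompts = ["A slightly active city scene", "A small town bustling with activity",
                   "People enjoying a vibrant outdoor market", "A lively street with shops and cafes"]
    elif gsr_value < 700:
        prompts = ["A busy and bustling city", "A city with high traffic and skyscrapers",
                   "A city skyline with illuminated buildings", "People walking in a vibrant urban setting"]
    else:
        prompts = ["A chaotic scene with a storm", "A city in the midst of a thunderstorm",
                   "A dramatic lightning storm over the city", "Dark clouds and heavy rain in the urban landscape"]

    # Select a random prompt based on GSR value
    prompt = random.choice(prompts)

    # Make a call to OpenAI API
    response = openai.Image.create(
        prompt=prompt,
        n=1,
        size="1024x1024"
    )

    # Extract image URL from the response
    image_url = response['data'][0]['url']

    # Get image data from the URL
    response = requests.get(image_url)

    # Open the image with PIL and create a PhotoImage object for Tkinter
    image = Image.open(BytesIO(response.content))
    photo = ImageTk.PhotoImage(image)
    label.config(image=photo)
    label.image = photo  # Keep a reference so the PhotoImage is not garbage-collected


# Assumed main loop (not shown in the original excerpt): poll the Arduino
# once per second. The port name '/dev/ttyACM0' is an assumption.
port = serial.Serial('/dev/ttyACM0', 9600, timeout=2)


def poll_sensor():
    latest = None
    # Drain buffered lines so the image reflects the most recent reading
    while port.in_waiting:
        text = port.readline().decode('ascii', errors='ignore').strip()
        if text.isdigit():
            latest = int(text)
    if latest is not None:
        create_image(latest)
    root.after(1000, poll_sensor)  # Check the sensor again in one second


poll_sensor()
root.mainloop()
```

Sample Images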

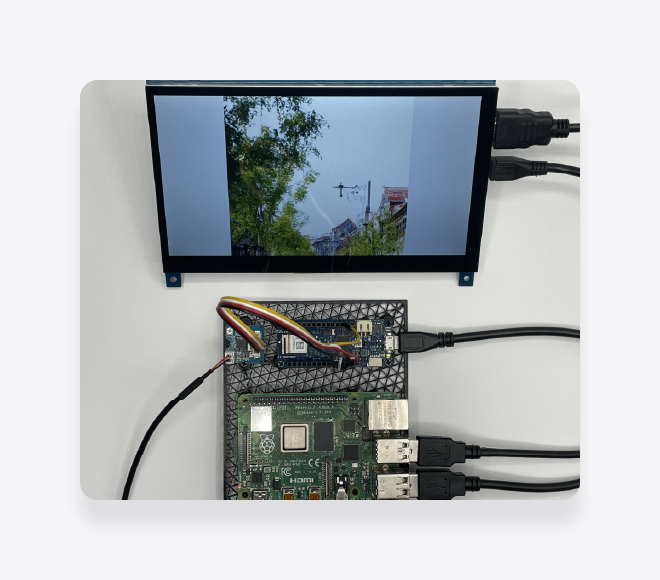

The proof of concept generated a variety of images reflecting physiological conditions as measured by the GSR sensor. When the sensor detected low levels of arousal, the Python script randomly selected one of the calm prompts for that threshold and sent it to the OpenAI DALL-E API, which generated calming scenes such as tranquil beaches, serene landscapes, and peaceful gardens.

As arousal levels increased, the generated images shifted to busier scenes, ranging from bustling towns and vibrant markets to high-traffic cities with towering skyscrapers. At the highest arousal levels, the generated images depicted chaotic and stormy environments, reflecting elevated physiological arousal.

These sample images showed that the first iteration of the system could translate physiological data into personalized content.

Closing Thoughts

This proof of concept demonstrates how emotion-responsive generative AI can create personalized visual content. Future enhancements could focus on improving real-time responsiveness. More accurate sensors could improve the detection of physiological changes. Adding sensors for humidity, temperature, proximity, light color, and light intensity could widen the scope of physiological responses the system can interpret.
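One possible direction for the real-time improvements, sketched here as an assumption rather than as part of the project: because each image request can take several seconds, the system could request a new image only when the reading moves into a different arousal band, with a small margin (hysteresis) so that noise near a threshold does not trigger a burst of requests. The `BandChangeTrigger` class and its default margin of 20 are hypothetical; the thresholds match the script's.

```python
import bisect
from typing import List, Optional

THRESHOLDS: List[int] = [300, 500, 700]  # same arbitrary cut-offs as the script


def band_of(value: int) -> int:
    """Return the arousal band (0-3) for a raw reading."""
    return bisect.bisect_right(THRESHOLDS, value)


class BandChangeTrigger:
    """Report True only when a reading settles into a different band."""

    def __init__(self, margin: int = 20) -> None:
        self.margin = margin
        self.current: Optional[int] = None

    def update(self, value: int) -> bool:
        if self.current is None:  # the first reading always triggers an image
            self.current = band_of(value)
            return True
        # Accept a new band only if it still holds after moving the reading
        # `margin` units back toward the current band.
        up = band_of(value - self.margin)
        down = band_of(value + self.margin)
        if up > self.current:
            self.current = up
        elif down < self.current:
            self.current = down
        else:
            return False
        return True
```

In a polling loop, `create_image` would then be called only when `update()` returns True, leaving the remaining readings free to update the display state without new API calls.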

In digital media, using emotion-responsive generative AI in music, video, and images opens new possibilities. Combining this technology with emerging fields like augmented reality and virtual reality also presents potential opportunities.

Beyond digital media, another project worth exploring centers on the idea of health-responsive generative AI with a focus on nutritional health and meal planning.